Not storing (almost) the same file twice

Reduce Data Science Storage Costs by 90%

De-duplicating Data Storage II: because sometimes bureaucracy gets in the way

In De-duplicating Data Storage I, I showed a technique for reducing the storage load on a system that encounters multiple copies of the same file. This is a fairly common situation in data-intensive environments, as Data Scientists make copies of the dataset they are working on, the one their colleague is working on, and the one they shared with a friend. Applying the technique, we showed a 36% increase in profits in a laboratory scenario.

There is another behaviour that is very common: Data Revisions.

Naturally, as time progresses, our source datasets change, resulting in changes to our output datasets. While most people think of keeping all data forever, for the most part we are only concerned with the current state. However, FOMO keeps us from removing now-historic, redundant datasets. Over time, this data grows continuously.

I’m going to share a way to reduce this cost by 90% over 3 years.

An example from my past was a heat map of financial transactions across Canada. This dataset was based on the last two years of financial data aggregated at the Postal Code level. To place the items on the map, we had a second dataset of financial districts and a third that consisted of postal codes and their corresponding financial district. Just to add a layer of complexity, Canada Post changes its postal delivery routes regularly, and therefore its postal codes; the boundaries of our financial districts also changed regularly. This means that the proportion of a Postal Code that resides within a Financial District is not consistent and required constant updating from a third-party provider.

The network drive folder looked something like this:

    name                              size
    ---------------------------------------
    /proj/1/dashboard.html           12 KB
    /proj/1/transactions.tns          50 B
    /proj/1/postalcodes.csv          33 MB [ref]
    /proj/1/districts.gml            37 MB [ref]
    ---------------------------------------
    Total                            70 MB

So we have three datasets: our live financial data (a live connection), a listing of postal codes, and a listing of location shapes. The relationship between the datasets and the final aggregate that is displayed on the dashboard can be described as:

    select
        d.label,
        d.shape,
        sum(p.weight * t.amt) as amt
    from
        transactions t
        inner join postal p on t.postal_code = p.code
        inner join districts d on d.id = p.district_id
    -- one row per district, so every non-aggregated column is grouped
    group by
        d.label,
        d.shape

While the actual financial summary is updated in real time, we receive updated districts and postal codes every quarter. A fairly normal practice is that every quarter we receive an email with a link to a CSV, and someone has to download the file and overwrite the current CSV files. However, it is considered a best practice to first make a copy of the old files in an archive folder. This is done by creating a copy and date-stamping it.

    name                              size
    ---------------------------------------
    /proj/1/
        dashboard.html               12 KB
        districts.gml                37 MB
        postalcodes.csv              33 MB
        arch/
            districts.202203.csv     37 MB
            districts.202204.csv     37 MB
            postalcodes.202203.csv   33 MB
            postalcodes.202204.csv   33 MB
    ---------------------------------------
    Total                           210 MB

I’m sure many people recognise this pattern of file management, as it is very common. It is also easy to see how this can spiral out of control.

notice that this is proj/1 of … let’s say about 100 active projects

these projects have been running longer than 2 months (average of 5 years)

corporate policy requires redundant backups of the drive space, with point-in-time recovery capabilities (daily for a month, monthly for 10 years)

Given these approximations, we have quickly consumed 210 MB of data per project (and that is a very small project), with 60 months of user copies, on slow archival disks with 150 disk snapshots (30 daily plus 120 monthly), across 100 projects: a total of about 180 TB. The policies and project volumes are all realistic; in fact, I suspect I’m underestimating the storage demand. Assuming AWS S3 Standard storage of the files ($0.021/GB), this costs roughly $70,000 CAD/year. (Please, check my math.)
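For anyone who does want to check the math, here is a rough sketch of the arithmetic in shell. It assumes the quoted rate is billed per GB per month, uses decimal units, and does no currency conversion; the function name is made up for illustration. With the inputs exactly as stated, it lands at roughly 189 TB and somewhat under 50,000 USD a year, the same order of magnitude as the figures above. The worksheet behind this article may round or price things differently.

```shell
#!/bin/bash
# Rough storage-cost estimate from the approximations in the text.
# Assumptions: the rate is per GB per month, 1 GB = 1000 MB, amounts in USD.
estimate_storage() {
    local mb_per_copy=$1 months=$2 snapshots=$3 projects=$4 usd_per_gb_month=$5
    awk -v mb="$mb_per_copy" -v months="$months" -v snaps="$snapshots" \
        -v projects="$projects" -v rate="$usd_per_gb_month" 'BEGIN {
            gb = mb * months * snaps * projects / 1000
            printf "%.0f GB, %.0f USD/year\n", gb, gb * rate * 12
        }'
}

# 210 MB per copy, 60 monthly copies, 150 snapshots, 100 projects
estimate=$(estimate_storage 210 60 150 100 0.021)
echo "$estimate"
```

Changing any one argument shows how sensitive the total is: the snapshot count and the number of monthly copies multiply each other, which is why the bill grows so quickly.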

It’s not my money … why should I care?

There were two events that made me care:

some users were denied access to the service because there were concerns about disk consumption and cost, and their business case write-up did not persuade the executives: a significant loss to the organisation.

a different user asked for the recovery of a single file, from an historic checkpoint. The backup team informed him they would need to “find a disk big enough to restore the point in time to”. The backup team needed to restore the entirety of all the projects to get one file: a significant expense to the organisation.

These are demonstrations of bureaucracy getting in the way. It stems from a poor understanding of digital storage and information management techniques on the part of both the IT department and the Data Scientists.

Sometimes we need to keep stuff moving, even when bureaucracy gets in the way.

A Quick History Lesson

The disk usage pattern in question is a well-established and intuitive one, usually developed by students in their first year of college. Changes made to complex systems can result in unanticipated outcomes, and it is not always obvious which change (or combination of changes) led to the behaviour; having old copies can help you to understand what has gone wrong.

Once we identify only the portions of a file that have changed, the basic problem becomes very recognisable to most programmers (actually, most publishers of content) as Change Management or Version Control. Over the decades, several tools called Version Control Systems (VCS) have evolved to manage this problem. While the field is littered with VCS, there are a few prominent ones that represent significant shifts in the way changes are thought of and managed:

CVS (1985) — changes to individual files are tracked independently

SVN (2000) — related changes to multiple files are considered a single unit

Git (2005) — collections of changes are able to be managed as separate units

These three tools represent important shifts in our understanding of how the databases that store revisions of data should be structured.

In a large volume of data, it is important to be able to see the differences between instances. Asking “what has changed?” in large volumes of data can be very difficult. Difference tools (diffing) became standard in the data and programming toolkit (diff) in the mid-70s. Further, tools like patch offer a way to transmit (or store) only the changes, which may be significantly smaller than an entire copy. There is now a plethora of graphical tools for such tasks.

Techniques to Reduce the Problem

These are well-established problems, and some best practices have evolved to deal with them.

Compression

While not the focus of this article, compression is an easy and often overlooked solution to the problem. From a solution architecture perspective, I am often glad it is overlooked. While putting the files in a zip archive is an easy solution, it does reduce the visibility of the changes: files need to be decompressed before they can be compared. Compression should be maintained inside the solution and abstracted from the user.

Having users compress their files actually reduces the ability of tools to take other actions that may have a larger impact.

Right-Scoped Repositories

In our initial problem description, the IT team had difficulty restoring the backups because they needed to restore the entire repository to a point in time.

Rather than creating backups of the entire repository (which is measured in hundreds of terabytes in our example), it may be more sensible to break the problem down into sub-parts. Many of our projects will get archived over time, projects change at different rates, and usage may decline or increase. This means that some data will have a greater or lesser probability of requiring a point-in-time restore.

Dividing the backups at a “per-project” level offers an obvious point of division. This allows us to strike a balance between the ease of backing up “instances” with the ease of restoring smaller instances to help the user.
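As a minimal sketch of that division, the following script writes one archive per project folder and then restores a single project without touching the others. It uses plain tar rather than any particular backup product, and the directory layout and file contents are hypothetical stand-ins for the shared drive.

```shell
#!/bin/bash
# Sketch: one archive per project instead of one archive for the whole drive.
# The layout under $root is a hypothetical stand-in for the shared drive.
root=$(mktemp -d)
mkdir -p "$root/proj/1" "$root/proj/2" "$root/backup" "$root/restore"
echo "postal codes" > "$root/proj/1/postalcodes.csv"
echo "districts"    > "$root/proj/2/districts.csv"

# Back up every project folder into its own date-stamped archive.
stamp=$(date +%Y%m%d)
for dir in "$root"/proj/*/; do
    name=$(basename "$dir")
    tar -czf "$root/backup/${name}.${stamp}.tar.gz" -C "$root/proj" "$name"
done

# Restoring project 2 reads only project 2's archive.
tar -xzf "$root/backup/2.${stamp}.tar.gz" -C "$root/restore"
ls "$root/restore"
```

The restore needs scratch space the size of one project, not the size of the whole drive, which is exactly the problem the backup team ran into.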

Only Store Changes

As discussed, tools like diff and patch offer a means to identify, store, and apply changes to larger files. Rather than storing multiple copies of the dataset, it is possible to store a primary dataset, and then track the series of changes that have taken place on it.

For example, country lists change regularly, requiring an update for even a spelling change. A CSV based on the Wikipedia page for ISO country codes could be modified with a patch description to accommodate Hungary’s name change in 2012.

    @@ -117,1 +117,1 @@
    -Hungary,Hungary,UN member state,HU,HUN,348,.hu
    +Hungary,Republic of Hungary,UN member state,HU,HUN,348,.hu

This is significantly smaller than storing the entire file of hundreds of countries for a single name change. As a tip, the primary should be the one you are actually using, and the changes can work backwards in time.
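To make the round trip concrete, here is a small sketch using diff and patch. It keeps the current file as the primary, records a reverse patch, deletes the old copy, and then rebuilds the old copy on demand. The header row and file names are made up for illustration, and the real list would have hundreds of rows rather than one.

```shell
#!/bin/bash
# Sketch: keep the current file plus a reverse patch instead of two full copies.
# File names and the header row are hypothetical.
work=$(mktemp -d)

printf '%s\n' \
    'name,official_name,status,alpha2,alpha3,numeric,tld' \
    'Hungary,Hungary,UN member state,HU,HUN,348,.hu' > "$work/countries.csv"
printf '%s\n' \
    'name,official_name,status,alpha2,alpha3,numeric,tld' \
    'Hungary,Republic of Hungary,UN member state,HU,HUN,348,.hu' > "$work/countries.2011.csv"

# diff exits with status 1 when the files differ, which is expected here.
diff -u "$work/countries.csv" "$work/countries.2011.csv" > "$work/back-to-2011.patch" || true

# The old copy can now be deleted and rebuilt from the current file plus the patch.
rm "$work/countries.2011.csv"
patch -s -o "$work/restored.csv" "$work/countries.csv" < "$work/back-to-2011.patch"
grep Hungary "$work/restored.csv"
```

The patch file holds only the changed line and a little context, so its size depends on how much changed, not on how big the dataset is.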

A Solution

Rather than performing all of these steps manually, we can turn to modern VCS applications, which were developed to solve exactly these problems. As an odd quirk of history, Subversion (SVN) is particularly well suited to handling large files, as it only tracks the differences between states.

While I would recommend any VCS solution as an improvement over file copies, SVN is ideally suited to a Data Analyst’s management of large datasets.

Take our original problem of a project storage system with archived folders

    name                                size
    -----------------------------------------
    /proj/1/
        schema.json                    128 B
        dashboard-template.html       232 KB
        dashboard.html                100 MB
        districts.csv                  37 MB
        postalcodes.csv                33 MB
        arch/
            dashboard.20220301.html   100 MB
            dashboard.20220315.html   100 MB
            dashboard.20220401.html   100 MB
            dashboard.20220415.html   100 MB
            districts.202203.csv       37 MB
            districts.202204.csv       37 MB
            postalcodes.202203.csv     33 MB
            postalcodes.202204.csv     33 MB
    -----------------------------------------

I have modified the example to include historic output reports built from a template.

Rather than trying to retrofit, let us start over (with project #2).

One of the very first improvements we can make to the storage structure is to create an independent archive location for each project. This independent location can then be turned into an SVN-controlled location.

    mkdir -p /arch/2;
    cd /arch/2;
    svnadmin create .;

Now we can link the archive location to the working location.

    mkdir -p /proj/2;
    cd /proj/2;
    svn checkout file:///arch/2 .;

Once this is done, we can begin to create our space and apply the changes as we make them.

    name                            size
    -------------------------------------
    /proj/2/
        schema.json                128 B
        dashboard-template.html   232 KB
        dashboard.html            100 MB
        districts.csv              37 MB
        postalcodes.csv            33 MB
    -------------------------------------

    cd /proj/2;
    svn add *;
    svn commit -m "Change 2022-03-01";

    cp /proj/1/arch/dashboard.20220315.html dashboard.html;
    svn commit -m "Change 2022-03-15";

    cp /proj/1/arch/dashboard.20220401.html dashboard.html;
    cp /proj/1/arch/districts.202204.csv districts.csv;
    cp /proj/1/arch/postalcodes.202204.csv postalcodes.csv;
    svn commit -m "Change 2022-04-01";

    cp /proj/1/arch/dashboard.20220415.html dashboard.html;
    svn commit -m "Change 2022-04-15";

Under these conditions, you create a snapshot only at the points where something changed:

the number of snapshots you have to maintain is reduced: no change, no snapshot

backups are generated per working folder, so a restore only needs as much space as the individual project to go back in time

your backup footprint is reduced because SVN only stores the differences between each snapshot

    name                            size
    -------------------------------------
    /proj/2/
        .svn/                    13.4 GB
        schema.json                128 B
        dashboard-template.html   232 KB
        dashboard.html            6.7 GB
        districts.csv             5.0 GB
        postalcodes.csv           1.7 GB
    -------------------------------------

Note the creation of the .svn folder; this is true for most VCS applications, which must create a control folder to track their link to the repository. This folder also contains a single pristine copy of the working files so that changes can be detected, doubling the storage space in the short term. The total storage space in the working location remains largely untouched as the changes are made.

Snapshots can be viewed via SVN’s log command

    $ svn log ^/ -qv
    --------------------------------------------------------------------
    r4 | jeff | 2022-04-15 00:43:13
    Changed paths:
       M /dashboard.html
    --------------------------------------------------------------------
    r3 | jeff | 2022-04-01 12:25:08
    Changed paths:
       M /dashboard.html
       M /districts.csv
       M /postalcodes.csv

And if a restore needs to be performed for a user, it is simple to select the historic revision.

    mkdir -p /tmp/history;
    pushd /tmp/history;
    svn checkout -r 3 file:///arch/2 .;
    popd;

One final benefit is that because the archives are no longer stored with the working copy, a different type of storage can be applied to the backups. Slower storage can hold the backups, while faster storage serves the working copy.
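When a user needs only one file from one point in time, a full checkout is not required either. The sketch below builds a throwaway repository with two revisions of a hypothetical file and then uses svn export to pull just that file at revision 1. It skips itself if Subversion is not installed.

```shell
#!/bin/bash
# Sketch: fetch one file from one historic revision, without a full checkout.
# Uses a throwaway repository; the file name and contents are hypothetical.
if ! command -v svn >/dev/null || ! command -v svnadmin >/dev/null; then
    echo "Subversion is not installed; skipping" >&2
else
    work=$(mktemp -d)
    svnadmin create "$work/repo"
    svn checkout -q "file://$work/repo" "$work/wc"

    echo "first quarter" > "$work/wc/districts.csv"
    svn add -q "$work/wc/districts.csv"
    svn commit -q -m "r1" "$work/wc"

    echo "second quarter" > "$work/wc/districts.csv"
    svn commit -q -m "r2" "$work/wc"

    # svn export writes an unversioned copy of a single path at revision 1.
    svn export -q -r 1 "file://$work/repo/districts.csv" "$work/districts.r1.csv"
    cat "$work/districts.r1.csv"
fi
```

Against the project repository created earlier at /arch/2, the equivalent would be something like svn export -r 3 file:///arch/2/districts.csv /tmp/history/districts.csv, which is the single-file restore the backup team could not do.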

The Social Aspect

Unfortunately, in many cases of encountering this problem, I have seen the interested parties pointing at one another and saying it is the other person’s fault. Data Analysts are unaware that version control tools exist, and IT departments view backups and restores as whole-system-events for recovering from complete system failures.

The question becomes: who is this article designed for? Data Scientists or System Administrators?

Really, this is for both. Hopefully, both parties work together to reduce costs and burden on the other, but the reality is that a change will likely have to start with the IT department.

Further, reducing costs is not seen as a positive in most organisations. Cost is associated with prestige: managerial resumes often boast about the size of the budget they managed. Reducing the budget reduces prestige.

A Scripted Solution

Given the social problems, the easiest way to introduce users to version control is to implement a simplified Version Control practice in an automated fashion, without asking users, offering training, or really talking about it.

There are two steps that should be taken:

Immediately install a VCS client on every computer that accesses the system in question

Integrate VCS creation into the project allocation part of the process

On the project setup and allocation side, the process usually starts with a request for space on the computer as a paper form (yes, companies are still filling out paper forms as of 2022-04-10). As this kicks off a large process involving many manual configuration steps, ensure the allocation of a VCS is part of that process. The simplest way to ensure this is done is to automate the entire process.

    #!/bin/bash
    proj="./proj";
    arch="./arch";

    # Create a repository for project $1 and attach it to the existing folder.
    function SetupFolder {
        local f=$1;
        local pPath="${proj}/${f}";
        local aPath="${arch}/${f}";
        local tPath;
        tPath=$(mktemp -d);
        mkdir -p "$aPath";
        svnadmin create "$aPath" && echo "Repository created." \
            || echo "Repository already exists ($?)";
        # Check out into a scratch folder, then move only the control folder
        # so the files already in the project become the working copy.
        svn checkout "file://${aPath}" "$tPath";
        mv "$tPath/.svn" "$pPath/.svn";
        rm -rf "$tPath";
    }

    {
        # Resolve absolute paths; file:// URLs require them.
        mkdir -p "$proj/00000000";
        proj=$(realpath "${proj}");
        echo "Project Folder: $proj";
        mkdir -p "$arch";
        arch=$(realpath "${arch}");
        echo "Archive Folder: $arch";

        for dir in "${proj}"/*/ ; do
            dir=$(basename "${dir}");
            echo "Updating: $dir" 1>&2;
            pPath="${proj}/${dir}";
            # Link any project that is not yet under version control.
            [[ -d "$pPath/.svn" ]] || {
                echo " - linking project" 1>&2;
                SetupFolder "$dir";
            }
            pushd "$pPath";
            (( $(svn status | wc -l) > 0 )) && {
                echo " - synchronizing changes" 1>&2;
                # "?" marks unversioned files, "!" marks missing ones;
                # the path starts at column 9 of the status output.
                svn status | grep '^?' | cut -c 9- | xargs -r -d '\n' svn add;
                svn status | grep '^!' | cut -c 9- | xargs -r -d '\n' svn del;
                svn commit . -m "$(date -Iminutes)";
                svn cleanup;
            };
            popd;
        done;
    } 1>/dev/null;

This script scans all of the folders and determines if a change has been made. If there are changes, it submits those changes to the archive location; if no archive location exists, it creates one. This can be run on a timer or, better yet, can use inotify to monitor for changes. Better still would be to install a self-serve interface like GitLab that can auto-allocate space and control permissions, but … bureaucracy.

Teaching Users

Secondly, install a highly visible VCS client on every computer in the office. Personally, I tie the installation of the client to the LDAP group that gives basic access to the Data System. By highly visible I mean TortoiseSVN on Windows, or RabbitVCS on Linux. In both cases, the users are automatically presented with icons that tell them something is unique about the folder they have been granted access to.

One of three things will happen:

A user already familiar with Change Management Systems will be pleasantly surprised

A user unfamiliar with Change Management Systems will curiously explore this new domain.

The user will not care… “you can lead a horse to water, but you can’t make it drink”.

Once users discover the log feature of the VCS, they become empowered to restore old versions as necessary. This reduces the burden on IT and the instances of manual duplication of files.

A Demonstration

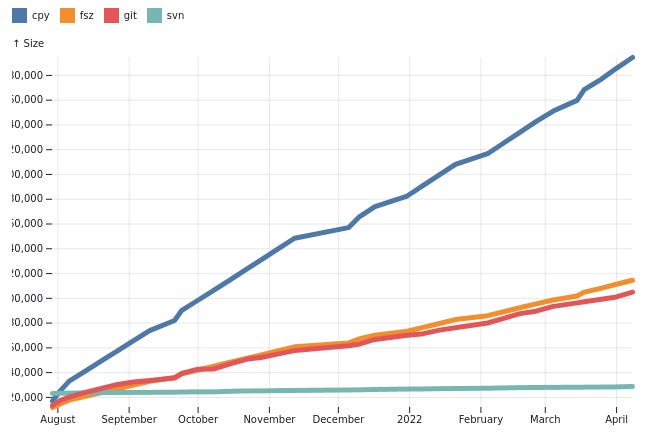

To demonstrate how this all fits together, as well as to compare the compression capabilities of various setups, four versions of the script were created:

Jeff Cave is telling stories about Software

CPY: Makes a copy of the files in the project folders over to the archive location. This is simply to offer a baseline comparison of what our users are currently doing

FSZ: Filesystem compressed. We hope our users use ZIP, so this creates a compressed copy of the folder each time the backup is called

GIT: Git is an excellent VCS, and should be included in any comparison.

SVN: The script that was offered earlier.

dedup2 · main · Jeff Cave / Demos

To simulate the activities our users go through, a script called demonstrate.sh downloads the history of the CIA World Factbook as JSON from GitHub, and stores each change in the project folders, and then backs them up. This is similar to our users receiving updates to their data files and then saving the old version to a backup folder. Results are stored in results.csv.
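The demo's own scripts are in the repository and are not reproduced here. As a rough sketch of how such a comparison could be recorded, the function below measures the on-disk size of each strategy's archive folder and appends one row per strategy to a CSV. The folder names reuse the four labels above, and the column layout (change number, label, size in KB) is an assumption, not necessarily the format of the demo's results.csv.

```shell
#!/bin/bash
# Sketch: record the on-disk size of each backup strategy after a change.
# The CSV layout (change,label,kilobytes) is an assumption for illustration.
record_sizes() {
    local archive_root=$1 change=$2 results=$3
    local label kb
    for label in CPY FSZ GIT SVN; do
        # Skip strategies that have not produced an archive folder yet.
        [ -d "$archive_root/$label" ] || continue
        kb=$(du -sk "$archive_root/$label" | cut -f1)
        echo "$change,$label,$kb" >> "$results"
    done
}

# Usage with two empty stand-in folders
demo=$(mktemp -d)
mkdir -p "$demo/CPY" "$demo/SVN"
record_sizes "$demo" 1 "$demo/results.csv"
cat "$demo/results.csv"
```

Calling the function once after every simulated change yields one growth curve per strategy, which is the shape of the comparison described next.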

Demo: De-duplicating Data Storage II

After 34 changes, we can see that Git and the zipped file system perform almost equally, mostly because that is essentially what Git does (it stores compressed snapshots of the file structure). Compression alone offers a lot of savings. By comparison, SVN shows almost no growth at all.

This brings us back to our original problem state: $70,000/year ($56K USD) for storage. Using automated version control, and SVN in particular, we are able to reduce the storage costs by 90%, to $7,000/year ($5.5K USD), under real-world conditions.

That is a 90% savings in costs, or about $63,000/year ($50K USD).

Most of these estimates are on the small side. Recent experience has shown the datasets involved changing in the range of 40 GB/quarter; at that rate, those numbers suddenly begin to reach the scale of millions of dollars.

Conclusion

This technique was applied several times over the decades and has been demonstrated to work with not just text files, but also Parquet and SAS data files, in Tableau and RStudio applications.

Optimisation of these techniques requires cognisant cooperation from both Data and System Analysts. Unfortunately, detailed information and change management techniques do not receive the excited attention of executives. However, a 90% decrease in costs (and therefore an increase in profits) is not something that should be ignored, and attention to these details is important.

For this reason, I have presented these techniques using an automated but unobtrusive approach that fosters an environment that can encourage learning and cooperation.

Mostly, these techniques become necessary to reduce cost as an excuse for progress.

… because sometimes bureaucracy gets in the way.

I encourage you to check out the sample from GitLab.

Also, have a look at the calculation worksheet and check my maths. Change the values to represent your workspace. How much can you save?

Remember: cost correlates to carbon. Reducing your consumption is easily measured in dollars, but also represents less pollution.

Save a byte, save the environment
