How fast is fast enough?

A rambling discussion of the implications of “Real-Time” data

My claim: “real-time” is anything faster than a change can be observed

Years ago, I got into a lunchtime discussion of real-time data processing and a couple of guys at the table started in with the macho attitude:

I used to work on fighter jets … real-time is microseconds

I used to do nuclear weapons testing … real-time is nanoseconds

I used to do solar flare warning systems …

As the time scales shrank, I started to realise that my entire perspective was different. I was coming from a healthcare setting involving patient charts, and in my head, the shortest timescale for transferring information was the inter-hospital patient transfer, a process that involved humans reading and interpreting textual information. In this case, the information bottleneck was the time it took for the patient to arrive at the new site and for staff there to read the chart (1–2 hours, sometimes up to a shift change).

My slower perspective was backed by one of the other developers at the table. In a previous lifetime, she had developed automated terrorism threat assessments and resource deployment systems (at least this is true in my head … she was always a little vague about what she had done previously). She was doing push notifications, but her bottleneck was the time it took for humans to comprehend the information they had received and to strap on a rifle. She put real-time at 15–30 minutes.

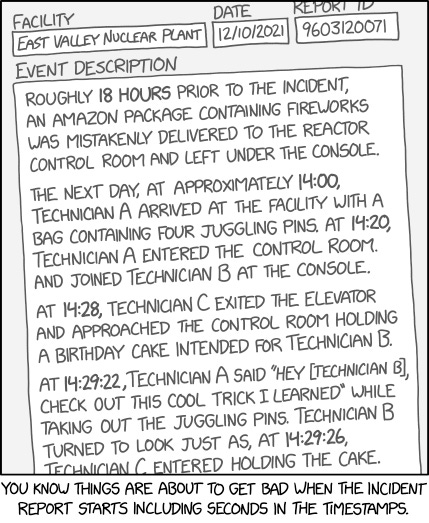

Timestamps

The way we think about time is often built on a lifetime of assumptions.

In some database systems, there is a datatype known as a timestamp. A timestamp is a sequential number that is applied to the system. It is not a date or a time; it is a point in time.

There is a very poignant scene in the TV show Angel in which Lorne (the karaoke hosting daemon) is counselling someone after a breakup: “I can hold a note for a long time … But eventually, that’s just noise. It’s the change we’re listening for … That’s what makes it music.”

I have often taken this same point a step further: it is the change of state that defines time; time itself is a perception of changing state. This may be an oversimplification, but in terms of managing data it is a useful one. From the point of view of our system, if there has been no change, then no time has passed. As our system does not have the same perception of time as we do, there is no reason it should use our convention of recording time.

In terms of timestamps, this means we should have “Point in time 1”, followed by “Point in time 2”, followed by …
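As a minimal sketch of this idea (the class and attribute names here are hypothetical, not taken from any particular database), a clock driven by change rather than by the wall might look like this. It advances only when the observed state differs from the previous one:

```python
class ChangeClock:
    """Logical clock: it ticks only when the observed state changes."""

    def __init__(self, initial_state=None):
        self.state = initial_state
        self.point_in_time = 0  # hypothetical name for the sequential timestamp

    def observe(self, new_state):
        """Record an observation; advance the clock only on a change."""
        if new_state != self.state:
            self.state = new_state
            self.point_in_time += 1
        return self.point_in_time


clock = ChangeClock(initial_state="idle")
readings = [clock.observe(s) for s in ["idle", "idle", "busy", "busy", "idle"]]
print(readings)  # [0, 0, 1, 1, 2]
```

Five observations were made, but from the system's point of view only two points in time have passed.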

Time is only observable at its smallest division, and time’s smallest division is the point of observation.

“Real-time” is what happens when change occurs between the observable points, so that it is available at the next point of observation (or possibly even creates the next observation).

Human Speed

In high school, a friend and I discovered Network Time Protocol (NTP) and the Canadian Atomic Clocks. While reading the user manual put out by the National Research Council, I remember reading the request to not use the most accurate servers.

It was just a friendly request to be polite.

The way the system works is that, depending on how accurate your needs are, you are supposed to use a progressively less accurate “stratum” of service. Stratum 1 sits right on top of the atomic clock, Stratum 2 servers update from Stratum 1 (introducing a little bit of potential error), and Stratum 3 servers update from Stratum 2 servers (introducing some more potential error). So, at the time, you were politely asked to use Stratum 3 servers to avoid overloading the Stratum 1 computers.

Seems fair.

Unfortunately, people are people, and my friend began updating his analogue watch (readable to the minute), by hand, from Stratum 1 servers. When I told him Stratum 3 (accurate in the range of milliseconds) was good enough and that he was decreasing the accuracy for everyone, he boldly told me, “Nope, only Stratum 1 is accurate enough for me”.

Macho statements aside, it is obvious that the speed bottleneck in the system is one of human scale, not computer scale.

A $10 watch is not going to retain accurate time for a long time. The drift in the network is less than the drift of the watch itself.

An analogue interface, interacting with a human eye, is going to have a read accuracy that is sub-minute, which is perfectly acceptable for the human turning the knob who cannot achieve better than sub-minute accuracy anyway.

The consumer is trying to get to classes, and meetups at the coffee shop, in a timely fashion. The time it takes to physically navigate space between these events introduces variance at the sub-quarter-hour level.

Society does not function at the microsecond scale.

At the time, the norm was to leave approximately 15 minutes to account for the vagaries of life. This is consistent for two people suffering from 5 minutes of error: 10 minutes of error, plus 5 minutes of agreement. 15 minutes was “real-time”.

Any effort, on my friend’s part, to achieve faster results was a complete waste of effort. At the same time, other people were genuinely being harmed.

Data Scientists relying on accurate time for weather modelling are hurt

Sailors at sea, getting bad weather predictions, are hurt

My friend gets hurt when everyone is annoyed at him for being late because he was fiddling with his watch to get it “really accurate”

Faster than Observed

It is rare that I see anyone reading data more frequently than daily. Even with push notifications, I receive a text message telling me to take action, but I’m happy if I can action it within a quarter business day.

Faster is still better than slower; and real time is faster than the change can be observed.

Many times, in a business setting, an executive or manager or business unit calls for a “monthly” report, so the report is run on the 31st; weekly reports are run on Friday.

This is a huge mistake, and given automated systems, we can do better. Real time is still something we can strive for, and a key benefit stems from the fact that the results don’t change significantly if we achieve “faster than observable” rates.

Since 2010, I have had a trading bot, developed in Google Sheets and JavaScript (I’m cheap and love free compute power), that sends me a text message any time I need to make a trade. The fastest data I receive is 15 minute delayed price data, but the most significant data I receive is published quarterly (Financial Reports). This data must be aggregated into averages and deviations and … patterns. The system is attempting to establish “normal”, and normal (by definition) doesn’t change by much.

This is true for most human-scale systems, most of the time.

Under these conditions, we are dealing with aggregate data. The changes are aggregated into averages over the scale of days or weeks or even quarters. The assessments are not going to change significantly on an hourly basis. This means that the assessment from yesterday is probably about the same as the assessment I am going to get today. I may see a change in the general trend, but it will be subtle and non-actionable in the short term.

There is a massively beneficial implication to this.

If I am producing a weekly report that interprets and advises the business, I should run the report daily. The average of 7 days of business operations is very likely to be similar to the average of 6 days. The results and conclusions of a report produced on Thursday will likely be the same as the conclusions produced on Friday.

If the Friday run fails, I have my conclusions from Thursday. If my Thursday run failed, I have early notice that the Friday run is likely to fail.

By working at one unit finer of granularity, you have given yourself lead time on potential issues, as well as created a fall-back plan in case of catastrophic failure.
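To make the Thursday/Friday argument concrete, here is a small sketch. The sales figures, the function names, and the "null means failure" rule are all invented for illustration; the point is only that a six-day average sits close to the seven-day one, and that the last good run survives a failed one.

```python
from statistics import mean

# Hypothetical daily figures for one reporting week; not real data.
daily_sales = [102.0, 98.0, 105.0, 97.0, 101.0, 99.0, 103.0]


def weekly_report(values):
    """Average the values seen so far; refuse to run on null data."""
    if any(v is None for v in values):
        raise ValueError("null value in input")
    return mean(values)


def latest_conclusion(runs):
    """Return the most recent successful result from a series of daily runs."""
    last_good = None
    for values in runs:
        try:
            last_good = weekly_report(values)
        except ValueError:
            pass  # keep the previous day's conclusion as the fall-back
    return last_good


thursday = weekly_report(daily_sales[:6])   # early run on 6 days of data
friday = weekly_report(daily_sales)         # official run on 7 days of data
relative_gap = abs(friday - thursday) / friday

# Simulate a Friday run that fails because of a null in the final day's data.
broken_friday = daily_sales[:6] + [None]
fallback = latest_conclusion([daily_sales[:6], broken_friday])
```

With these numbers the Thursday and Friday averages differ by well under one percent, and `fallback` is simply Thursday's result.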

In the past, this has resulted in

~3000 employees (myself included) getting paid for 13 days instead of not getting paid at all

A JIT system (a life-safety service) being able to estimate demand early, so that key staff could attend a funeral

Countless times I did not have to do overtime because a combination of poor null handling and weird data caused failures, but (thankfully) days in advance.

These are the same principles taught in my high school math and physics classes: do the calculation with one more decimal place than you report. In business reporting: if you are tracking dollars, do the calculation in cents; if you are tracking cents, do your calculations in fractions of a penny.
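A quick worked example of why the finer unit matters, using made-up prices. Suppose the report is in whole dollars and three items cost $1.49 each:

```python
# Hypothetical prices, held as integer cents (one unit finer than the report).
prices_in_cents = [149, 149, 149]

# Rounding each item to the reporting unit (dollars) before adding.
rounded_first = sum(round(c / 100) for c in prices_in_cents)

# Adding in the finer unit (cents) and rounding once at the end.
rounded_last = round(sum(prices_in_cents) / 100)

print(rounded_first, rounded_last)  # 3 4
```

Rounding early reports $3; calculating in cents and rounding once reports $4, which is much closer to the true $4.47.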

Push and Pull

Push notifications change the playing field somewhat. Rather than updating the data on a schedule, we advertise changes to interested parties. However, we are still constrained by the response time of our slowest observer. Even in the case of detection of nuclear blasts, the point was to log and collect the data for interpretation by humans at a later date.

Take my trading bot: It sends me text notifications almost immediately (magnitude of seconds). This is faster and far more convenient/reliable than me checking once a day; however, it does not mean I can respond any faster. Real-time is constrained both by the speed at which I receive the information and the speed at which I can respond. If I am trapped in a meeting, a secure network environment, or up to my waist in a river while fishing, I may not be able to initiate the trade for a couple of hours.

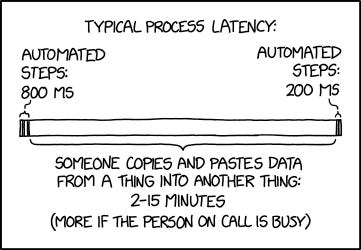

Lazy Loading Improves Net Performance

So if, in most (human) cases, it is sufficient to deal in timescales of minutes or hours, then we can conclude that the reports do not need to be updated any faster than that.

In general, we can consider that updates do not need to be generated more frequently than they are going to be consumed by the observer (either digital, human, or system). This idea is where push notifications can both help and hurt us.

Polling (regular pulls) of source systems generates needless processing effort. Requesting information comes at a processing cost where both systems need to expend effort talking to one another. If there are no changes to the system, all of that effort results in “no change”.

Push notifications allow us to reduce this overhead by having the source system transmit change notifications to interested parties in the event something actually changes. This means that no processing is performed until something needs updating.
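The difference in effort can be sketched with a toy source system. Everything here (the `Source` class, its methods, the counter) is hypothetical and stands in for whatever real system is being read:

```python
class Source:
    """A hypothetical source system that supports both pull and push."""

    def __init__(self, value):
        self._value = value
        self._subscribers = []
        self.requests_served = 0  # work done answering pulls

    def read(self):
        """Pull: every call costs a request, changed or not."""
        self.requests_served += 1
        return self._value

    def subscribe(self, callback):
        """Register an interested party for change notifications."""
        self._subscribers.append(callback)

    def write(self, value):
        """Push: subscribers are told only when the value really changes."""
        if value != self._value:
            self._value = value
            for callback in self._subscribers:
                callback(value)


source = Source(value=10)
received = []
source.subscribe(received.append)

# Polling: ten pulls during which nothing changed.
polled = [source.read() for _ in range(10)]

# Push: three writes, only one of which is a real change.
for v in (10, 10, 11):
    source.write(v)
```

Ten polls cost ten requests and all returned the same value, while the subscriber received exactly one message, for the one change that happened.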

However, given our sub-hour threshold for “real-time”, it is very possible that we may receive notice of change more frequently than we need to report it. We may recalculate a report more frequently than it can be observed.

This was most evident to me in a simple web-app I was recently working on. I wanted the user to receive real-time notification of the correctness of their entry into the form.

Every change to the form results in a change event that is processed by the back-end. I was following the error notifications coming back from the validation, and was trying to type in a valid value to observe the update come back to the form.

I was being driven crazy. I was only 10 characters away from a positive result, but kept getting stopped by each key-press as it recalculated the validity. Each key-press was pushing a notification to the back-end, which was triggering a recalculation … but I could already see I was wrong … internally, I was begging the system to just let me finish typing.

In the end, I put a timer on the validation: do not update the validation more frequently than every 23 milliseconds. That little bit of delay allowed me to finish typing, and the quality of the feedback did not suffer (it maybe even improved) by bringing it into the human scale.
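The original web-app code is not shown here, so the following is only a sketch of that kind of timer, written as a simple rate limiter. The class name, the ten-character validation rule, and the injectable clock (used so the example runs deterministically) are all assumptions.

```python
import time


class ThrottledValidator:
    """Runs `validate` at most once per `min_interval` seconds."""

    def __init__(self, validate, min_interval, clock=time.monotonic):
        self._validate = validate
        self._min_interval = min_interval
        self._clock = clock
        self._last_run = None
        self.result = None   # latest validation result shown to the user
        self.calls = 0       # how many times the back-end was actually hit

    def on_change(self, text):
        """Handle one key-press; skip validation if one ran too recently."""
        now = self._clock()
        if self._last_run is None or now - self._last_run >= self._min_interval:
            self._last_run = now
            self.calls += 1
            self.result = self._validate(text)
        return self.result


fake_now = [0.0]  # simulated clock, in seconds
validator = ThrottledValidator(
    validate=lambda text: len(text) >= 10,  # hypothetical rule: 10+ characters
    min_interval=0.023,                     # the 23 ms mentioned above
    clock=lambda: fake_now[0],
)

entry = "0123456789"
for i in range(len(entry)):
    fake_now[0] = i * 0.010                 # simulate a key-press every 10 ms
    validator.on_change(entry[: i + 1])
```

Ten key-presses result in four validations instead of ten. A plain rate limiter like this can skip the final key-press, so a real form would probably also validate once more when typing pauses or the field loses focus.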

Buffering results until someone actually wants them takes us back to the concept of Lazy Loading. If nobody is going to read your data, don’t bother calculating it. This reduces overhead because you may have 20 updates, but only one view (and therefore calculation).

If you are speaking about push notifications, you should be thinking about lazy-loading. A push notification can be used to notify our system that it needs to update, but we can use Lazy Loading to defer that processing until it is needed. But it is a balancing act. We can defer processing, but also need to balance it with performing it frequently enough.
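One way to combine the two, sketched with hypothetical names: the push notification only marks the report as stale, and the calculation is deferred until somebody looks at it.

```python
class LazyReport:
    """Recalculates only when someone reads it after a change notification."""

    def __init__(self, compute):
        self._compute = compute   # the expensive calculation, supplied by the caller
        self._dirty = True        # nothing has been calculated yet
        self._cached = None
        self.calculations = 0

    def notify_changed(self):
        """Push-notification handler: cheap, it just marks the cache stale."""
        self._dirty = True

    def view(self):
        """Called when an observer actually looks at the report."""
        if self._dirty:
            self._cached = self._compute()
            self.calculations += 1
            self._dirty = False
        return self._cached


data = []
report = LazyReport(compute=lambda: sum(data))

for value in range(20):       # 20 updates arrive...
    data.append(value)
    report.notify_changed()

total = report.view()         # ...but there is only one view
report.view()                 # a second look reuses the cached result
```

Twenty notifications lead to a single calculation. The balancing act mentioned above shows up in `view`: if the calculation is slow, the first observer after a change pays for it, which is the argument for also refreshing on a schedule that stays one step ahead of the audience.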

Conclusion

Most of us are not attempting to detect the oncoming wave of a nuclear blast, nor are we racing ahead of a Solar Flare about to destroy our multi-billion dollar data on our international network infrastructure. Most of us operate on a timescale of minutes or hours, well within the operating tolerances of even the most basic of desktop computers.

Given this, we need to remember two key points:

Time scales dictate “real-time”

You should always be processing one unit of time smaller than will be consumed

Through a combination of lazy-loading and push notifications, we can scale our response time to an appropriate level. Obviously, an aircraft trying to stay airborne requires a different scale than inventory management in a retail organisation. Inundating humans with data does not improve information uptake.

Having said that, we want to make sure we keep ahead of our audience. We can deliver information faster and more frequently than people need it; there is therefore no reason not to have it standing by, ready for them when they want it. There is no reason for us not to do our checks and balances well in advance.

The key is balance.

Don’t let sales tactics (and your own ego and machismo) make you forget that every solution has its own set of drawbacks, and every solution is subject to the law of diminishing returns. At some point we need to recognise that the problem is solved, and that the solution is “good enough”.

Once “real-time” moves past “observable”, getting faster is “wasted-time”.